INSPIRATION

Bridging the gap

Only 63 percent of English language learners (ELLs) graduate from high school, compared with the overall national rate of 82 percent. Of those who do graduate, only 1.4 percent take college entrance exams like the SAT and ACT ("English Language Learners: How Your State Is Doing," NPR).

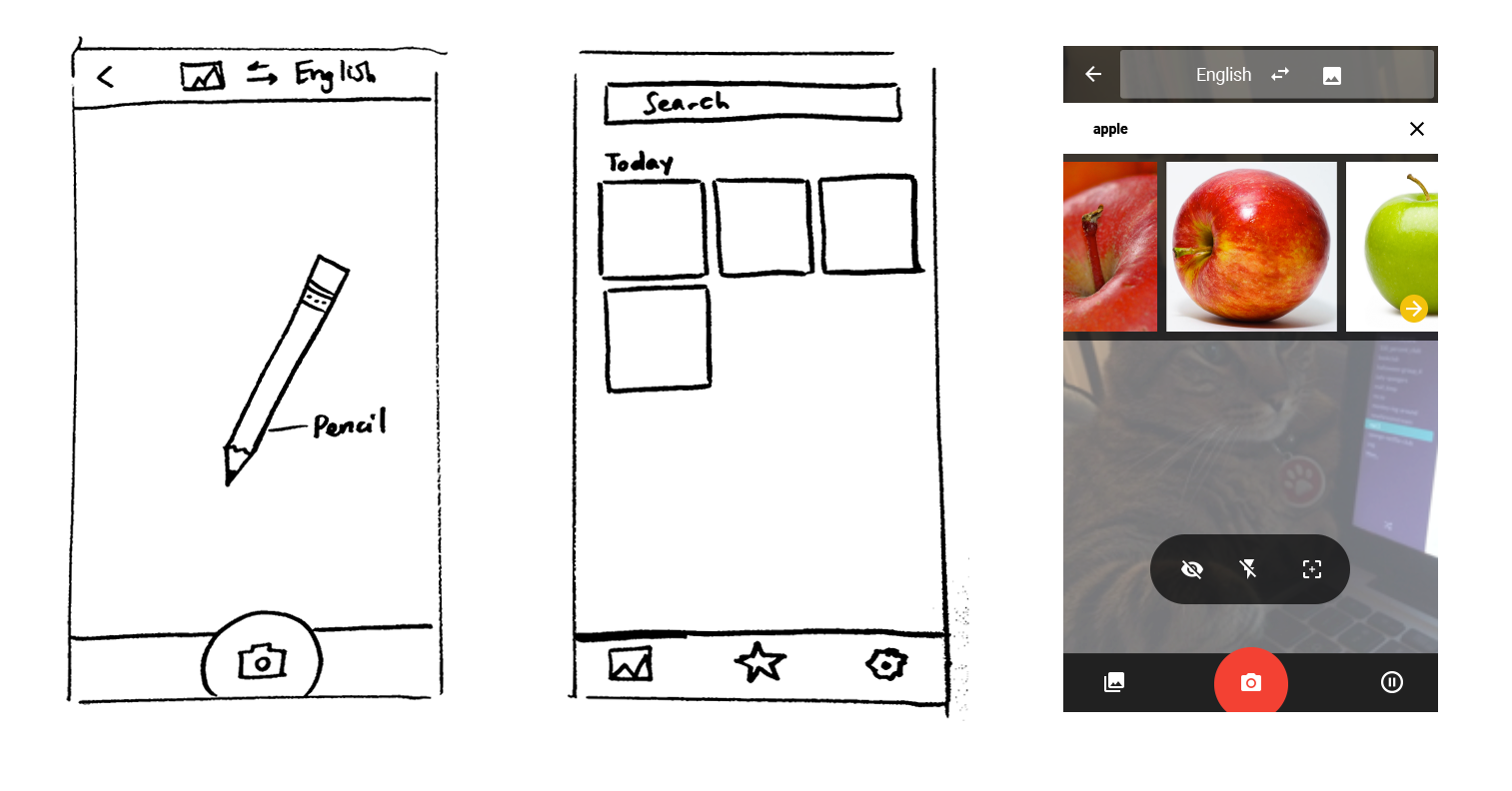

Technology can't fix a broken education system, but it might provide some relief for students who need help.

Why Google?

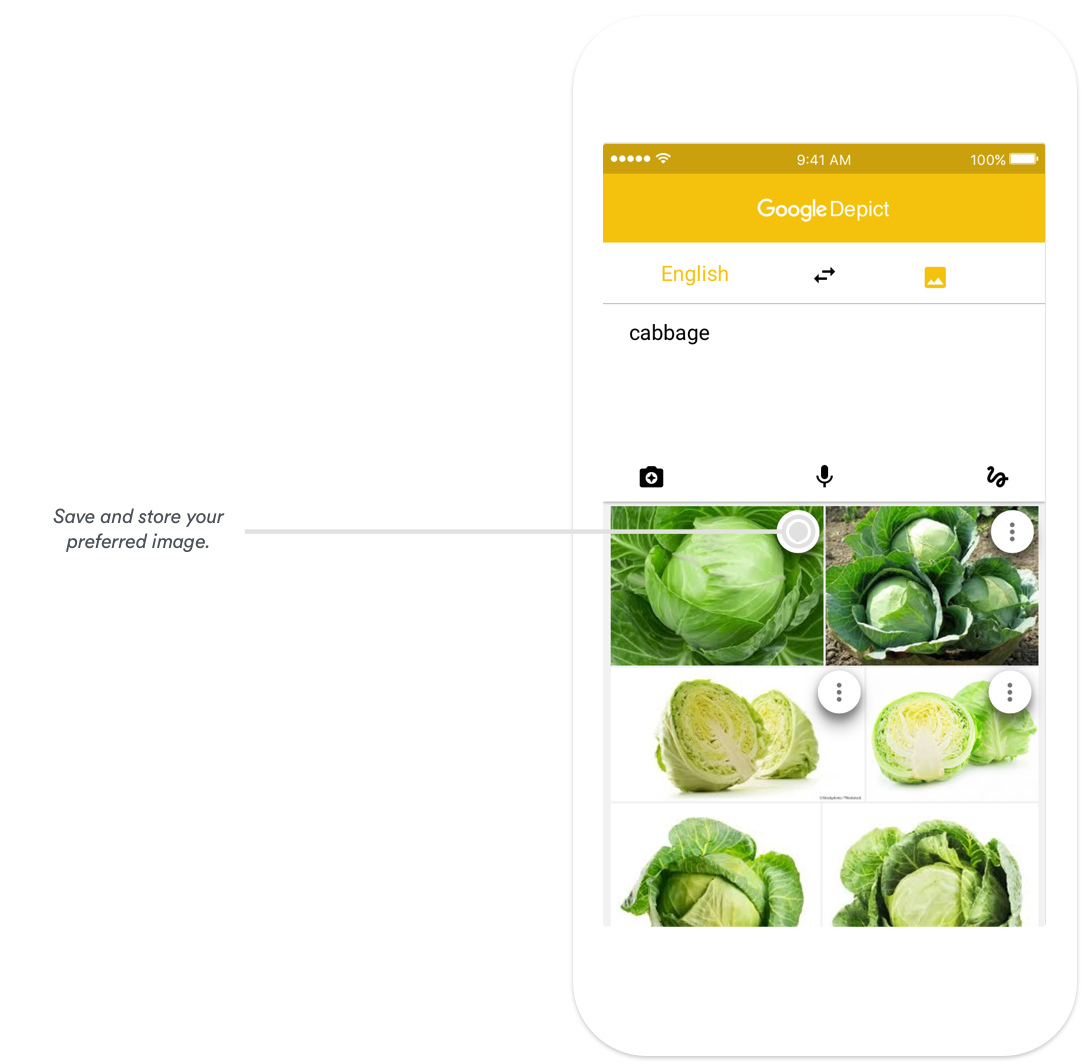

Google seemed like an obvious choice for this app. It already has the infrastructure the app needs, including a robust image recognition API and Google Images, the most comprehensive image search on the web. It also has a demonstrated commitment to education and to expanding access to learning.